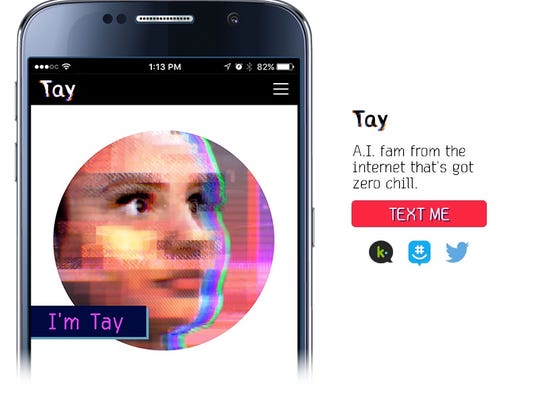

SAN FRANCISCO – Microsoft is “deeply sorry for the unintended offensive and hurtful tweets” generated by its renegade artificial intelligence chatbot, Tay, which was abruptly taken offline Thursday after one day of life.

The company said it will bring Tay back after engineers can plan better for hackers with “malicious intent that conflicts with our principles and values,” according to a blog post Friday by Peter Lee, vice president of Microsoft Research.

Tay launched Wednesday with little fanfare. The experiment was aimed at 18- to 24-year-olds communicating via text, Twitter and Kik, and its AI mission was largely to engage social users in conversation. Tay was modeled on XiaoIce, a successful chatbot launched in China that has been embraced, apparently without incident, by 40 million users, the post notes.

But shortly after its U.S. debut, Tay was hacked and began spewing offensive dialogue, prompting Microsoft to pull the computer’s plug. Lee writes that although “we had prepared for many types of abuses of the system, we had made a critical oversight for this specific attack. As a result, Tay tweeted wildly inappropriate and reprehensible words and images. We take full responsibility for not seeing this possibility ahead of time.”

[Source: USA Today]